Simple Chat

Before you begin

To use the features in this section, you need an active Spojit account. If you don't have an account, you can check out the pricing and register here. If you already have an account, you can log in here.

Our simple chat service lets you integrate Large Language Models (LLMs) into your workflows to generate responses from a system prompt and user input. You can choose from a range of popular LLMs to tailor the conversational experience to your needs, and the minimal configuration makes it easy to add LLM-powered chat to your application or service.

To get started, add LLM Chat to your canvas, select your provider, and then fill in the configuration variables.

Provider

Choose your provider in the authorization section of the service.

Tip

Different models can produce different responses to the same prompts.

Configuration

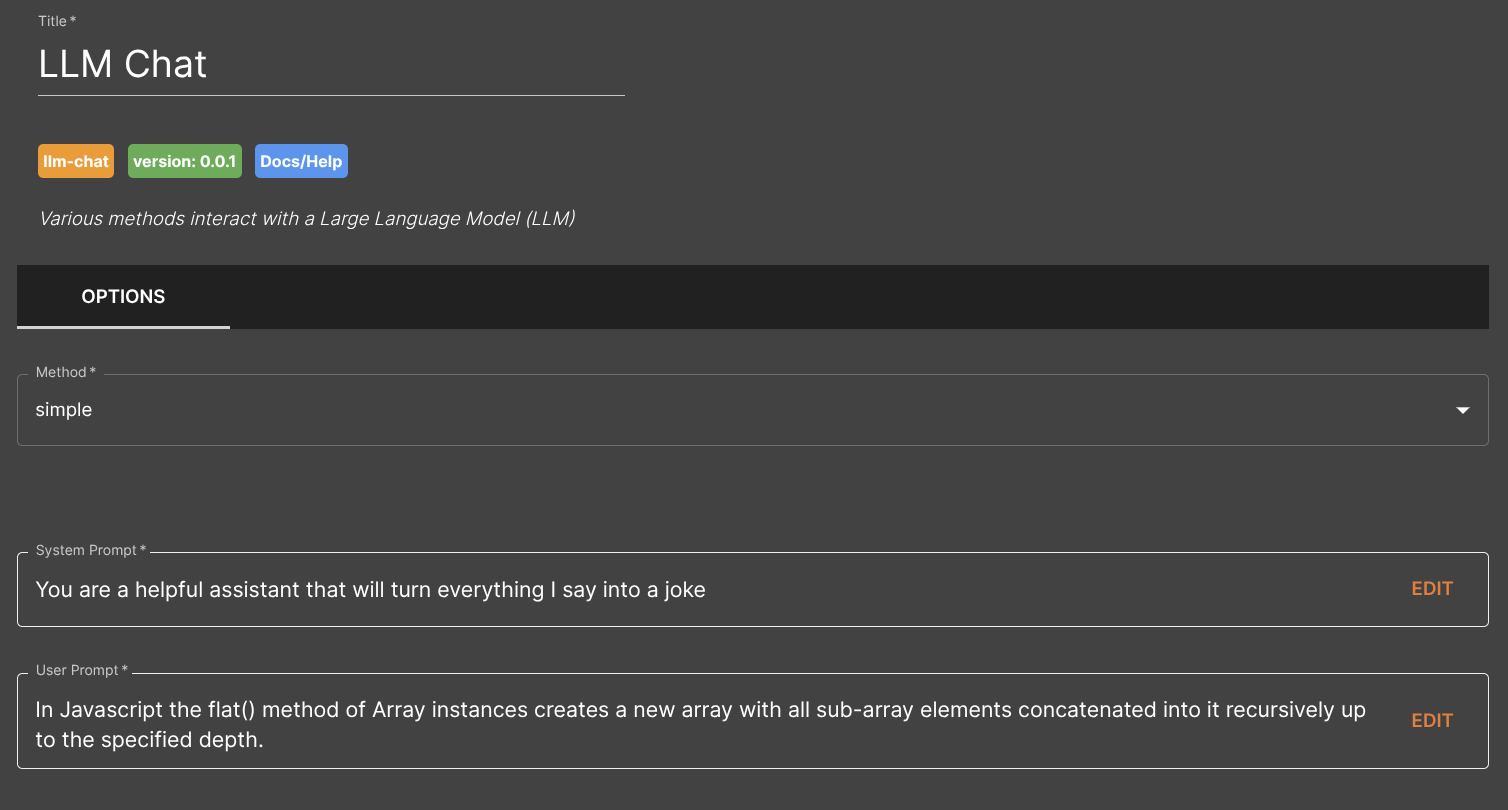

Fill out the following configuration prior to running the workflow:

| Option | Description | Default | Required |
|---|---|---|---|
| Method | Select "simple". | - | TRUE |
| System Prompt | The AI's overall behavior and role. | You are a helpful assistant. | TRUE |
| User Prompt | Provides specific instructions or questions for a particular task or interaction. | - | TRUE |
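To illustrate how these three options fit together, the following Python sketch models the configuration as a small data class. The class name `SimpleChatConfig`, its `validate` and `to_messages` methods, and the example prompt are hypothetical and not part of Spojit. The mapping of the two prompts onto a role-based message list is an assumption based on how chat LLM APIs commonly work; how Spojit passes the prompts to your provider is not documented here.

```python
from dataclasses import dataclass

DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant."


@dataclass
class SimpleChatConfig:
    """Hypothetical model of the LLM Chat "simple" configuration options."""
    user_prompt: str
    system_prompt: str = DEFAULT_SYSTEM_PROMPT  # default from the configuration table
    method: str = "simple"

    def validate(self) -> None:
        # All three options are marked as required in the configuration table.
        if self.method != "simple":
            raise ValueError('Method must be "simple" for the simple chat service')
        if not self.system_prompt.strip():
            raise ValueError("System Prompt is required")
        if not self.user_prompt.strip():
            raise ValueError("User Prompt is required")

    def to_messages(self) -> list[dict[str, str]]:
        # Assumption: the prompts map onto the role-based message list
        # used by many chat LLM APIs (system first, then user).
        self.validate()
        return [
            {"role": "system", "content": self.system_prompt},
            {"role": "user", "content": self.user_prompt},
        ]


# Illustrative usage with a hypothetical user prompt.
config = SimpleChatConfig(user_prompt="Tell me a programming joke.")
for message in config.to_messages():
    print(message["role"], "->", message["content"])
```

The system prompt sets the overall behavior once, while the user prompt carries the task for this particular run, which is why only the user prompt has no default.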

Example configuration and mapping

The following example shows how to add a system prompt and a user prompt:

The simple chat service doesn't require any service data setup.

In this example, the service automatically generates the following output after it runs:

```json
{
  "data": "Why did the JavaScript developer's flat method go to therapy? Because it was struggling to get to the root of its problems! (get it?)",
  "metadata": {
    "usage": {
      "input_tokens": 61,
      "output_tokens": 30,
      "total_tokens": 91
    }
  }
}
```

The `data` node contains the content generated by the model, while the `metadata` node reports the model's token usage.
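If a later step in your workflow consumes this result, the following Python sketch shows one way to pull out the generated text and the token counts. It assumes the output arrives as the JSON shown in the example; `read_chat_output` is a hypothetical helper, not part of Spojit.

```python
import json

# Sample result copied from the example output in this section.
raw_output = """
{
  "data": "Why did the JavaScript developer's flat method go to therapy? Because it was struggling to get to the root of its problems! (get it?)",
  "metadata": {
    "usage": {"input_tokens": 61, "output_tokens": 30, "total_tokens": 91}
  }
}
"""


def read_chat_output(text: str) -> tuple[str, dict[str, int]]:
    """Hypothetical helper: return the generated content and the token usage."""
    result = json.loads(text)
    content = result["data"]              # text generated by the model
    usage = result["metadata"]["usage"]   # token counts reported for the run
    return content, usage


content, usage = read_chat_output(raw_output)
print(content)
print(f"input={usage['input_tokens']} output={usage['output_tokens']} total={usage['total_tokens']}")
```

In the sample, `total_tokens` (91) equals `input_tokens` (61) plus `output_tokens` (30), so tracking the total is usually enough when monitoring usage across runs.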